The Mind of the Computer

A Convolutional Neural Network learns to classify an image. What is the computer doing when it performs this feat of ‘recognition’? Is it something we understand?

Here is what we know. Digital images are submitted as inputs: grids of pixels whose RGB values are simply numbers. A series of mathematical operations is then applied across the network’s layers, some of them hidden, and by virtue of loss functions, gradient descent, backpropagation, and so forth, the network steadily increases its probability of correctly classifying a picture: a dog vs. a cat, a man vs. a woman, Robert vs. Peter, a frisbee, an arm, or what have you.
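To make ‘mathematical operations on a grid of numbers’ concrete, here is a minimal sketch of the sliding-window operation that gives a convolutional network its name. Everything in it is an assumption made for illustration: the 4x4 grayscale image, the hand-picked kernel, and the function name `convolve2d`. A trained network learns its own kernel values, and there are many of them.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over every position where it fits inside `image`.

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The output is large only in the middle column, where the dark half meets the bright half. To the computer this is arithmetic and nothing more; ‘edge’ is our word for it.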

If I say to you, “Hey, toss me that pen, would you?” you know what I mean. I know you know, because then you toss me the pen sitting on the couch next to you. We interact with the world. The same is true of dogs, cats, men, women, Robert, Peter, a frisbee, and an arm: we interact with them and know what they are.

A computer has no such experience. So what is it doing to differentiate one object from another? In psychology this problem is known as visual constancy: how do I recognize something when it can be seen from many angles, at many distances, partially obscured or not, in the light or in the dark?

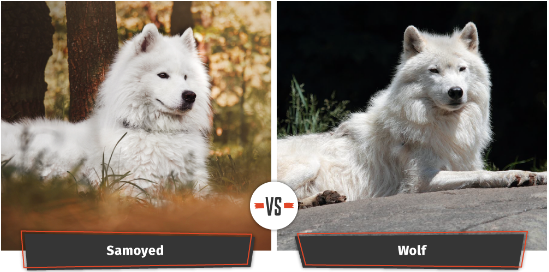

In their excellent paper “Deep Learning” (Nature, 2015), Yann LeCun, Yoshua Bengio, and Geoffrey Hinton describe a computer tasked with differentiating between a white wolf and a breed of wolf-like white dog called a Samoyed.

“With multiple non-linear layers, say a depth of 5 to 20, a system can implement extremely intricate functions of its inputs that are simultaneously sensitive to minute details — distinguishing Samoyeds from white wolves — and insensitive to large irrelevant variations such as the background, pose, lighting and surrounding objects.”

How does the machine know what is relevant and what isn’t? It does so by running its calculations, comparing its results against the labeled training set, and adjusting its weights to reduce the mismatch.
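A toy sketch can show what ‘adjusting weights’ amounts to. It is not a CNN: it is a single-layer logistic classifier trained by plain gradient descent, and the two input features (`shape_cue` and `background_brightness`), the four training examples, and the starting weights are all invented for illustration. The background value is deliberately uncorrelated with the label, and the model deliberately starts out paying attention to it.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical training set: (shape_cue, background_brightness) -> label.
# Label 1 stands for "Samoyed", 0 for "wolf". The background takes both
# values equally often in each class, so it carries no information.
examples = [
    ((1.0, 1.0), 1),
    ((1.0, -1.0), 1),
    ((-1.0, 1.0), 0),
    ((-1.0, -1.0), 0),
]

weights = [0.0, 0.5]  # start with some weight on the background (assumed)
bias = 0.0
learning_rate = 0.5

for step in range(2000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for features, label in examples:
        z = bias + sum(w * x for w, x in zip(weights, features))
        # Gradient of the cross-entropy loss with respect to z.
        error = sigmoid(z) - label
        for k, x in enumerate(features):
            grad_w[k] += error * x / len(examples)
        grad_b += error / len(examples)
    # Gradient descent: nudge each weight against its gradient.
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

w_shape, w_background = weights
print("weight on shape cue:", round(w_shape, 3))
print("weight on background:", round(w_background, 3))
```

After training, the weight on the shape cue has grown large while the weight on the background has shrunk toward zero. Nothing in the loop mentions relevance; the background weight shrinks only because keeping it raises the loss on the labeled examples.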

The above description of irrelevant variations (background, pose, lighting, and surrounding objects) has meaning for us, but not for a computer. From our human standpoint we can surmise which factors are ‘irrelevant’, but we really don’t know how many dimensions the computer has filtered out. 4? 10? 6,000?

What is it actually doing? Do we know?

I recall that at a Singularity Summit, the lead of the Watson program/algorithm team was asked whether he understood how Watson was arriving at the correct answer. He unhesitatingly answered, “No.”

It would appear that there is much to be gained by thinking about what it is that we actually do when we recognize and generalize, whether about images or ideas.